Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI | Lex Fridman Podcast #367

Last updated: Jun 2, 2023

The video is a conversation between Lex Fridman and Sam Altman, CEO of OpenAI, discussing the development of GPT-4 and other AI technologies, as well as the potential benefits and dangers of AI in society.

The video is a conversation between Lex Fridman and Sam Altman, the CEO of OpenAI, discussing the future of AI and OpenAI's current projects, including GPT-4 and ChatGPT. Altman talks about the excitement and potential of AI, but also acknowledges the potential dangers and the need for careful consideration and regulation. They also discuss the importance of conversations about power, companies, institutions, and political systems that deploy and balance the power of AI. Altman describes GPT-4 as an early AI system that will pave the way for future advancements in the field.

- OpenAI was mocked and misunderstood when it was founded in 2015.

- We are on the precipice of fundamental societal transformation.

- Conversations about AI are conversations about power, companies, institutions, and political systems that deploy, check, and balance this power.

- GPT-4 is a system that we will look back at and say it was a very early AI.

- The science of human guidance is important to make AI usable, wise, ethical, and aligned.

- AI has the potential to solve many problems in society, such as climate change and disease.

- OpenAI's mission is to ensure that AI benefits humanity.

- GPT-4 compresses all of the web into a small number of parameters, forming one organized black box of human wisdom.

- AI can also be used to solve some of the world's biggest problems, such as climate change and disease.

OpenAI's Beginnings

- OpenAI was mocked and misunderstood when it was founded in 2015.

- People thought it was ridiculous to talk about AGI.

- OpenAI and DeepMind were brave enough to talk about AGI in the face of mockery.

- OpenAI doesn't get mocked as much now.

The Possibilities and Dangers of AI

- We are on the precipice of fundamental societal transformation.

- The collective intelligence of the human species will soon pale in comparison to the general superintelligence in the AI systems we build and deploy at scale.

- This is both exciting and terrifying.

- AI has the power to destroy human civilization intentionally or unintentionally.

- Conversations about AI are conversations about power, companies, institutions, and political systems that deploy, check, and balance this power.

The Importance of Conversations About AI

- Conversations about AI are not merely technical conversations.

- They are conversations about power, distributed economic systems, psychology, and the history of human nature.

- It is important to have conversations with both optimists and cynics.

- It is important to celebrate the accomplishments of the AI community and to steel man the critical perspective on major decisions various companies and leaders make.

- These conversations aim to help in a small way.

GPT-4 and AI Technologies

- GPT-4 is a system that we will look back at and say it was a very early AI.

- It is slow, buggy, and doesn't do a lot of things very well, but neither did the very earliest computers.

- GPT-4 and other AI technologies constitute some of the greatest breakthroughs in the history of artificial intelligence, computing, and humanity in general.

- AI has the potential to empower humans to create, flourish, and escape poverty and suffering.

- AI also has the power to suffocate the human spirit in totalitarian ways.

Development of GPT-4

- Progress in AI is a continual exponential curve.

- It's hard to pinpoint a single moment where AI went from not happening to happening.

- ChatGPT was a pivotal moment because of its usability.

- RLHF (reinforcement learning from human feedback) is the magic ingredient that made ChatGPT more useful.

- RLHF aligns the model to what humans want it to do.

Science of Human Guidance

- The science of human guidance is at an earlier stage than creating large pre-trained models.

- RLHF requires far less data and human supervision than pre-training does.

- The science of human guidance is important to make the model usable, wise, ethical, and aligned.

- The process of incorporating human feedback and what humans are asked to focus on is fascinating.

- The pre-training data set is pulled together from many different sources, including open source databases, partnerships, and the general web.

Potential Benefits and Dangers of AI

- AI has the potential to solve many problems in society, such as climate change and disease.

- AI can also create new problems, such as job displacement and bias.

- It's important to think about the long-term consequences of AI and to ensure that it benefits everyone.

- AI should be developed in a way that is transparent, explainable, and aligned with human values.

- There should be a balance between innovation and regulation to ensure that AI is used for good.

OpenAI's Mission and Future

- OpenAI's mission is to ensure that AI benefits humanity.

- OpenAI is working on developing more advanced AI technologies, such as GPT-4.

- OpenAI is also working on making AI more accessible and understandable to everyone.

- OpenAI is committed to ensuring that AI is developed in a safe and responsible way.

- The future of AI is uncertain, but OpenAI is working to ensure that it benefits everyone.

Development of GPT-4

- The creation of GPT-4 involves many pieces that have to come together.

- There is a lot of problem-solving involved in executing existing ideas well at every stage of the pipeline.

- There is already a maturity happening on some of these steps, like being able to predict how the model will behave before doing the full training.

- The process of discovering science is ongoing, and there will be new things that don't fit the data that require better explanations.

- With what we know now, we can predict the peculiar characteristics of the fully trained system from just a little bit of training.

Understanding GPT-4

- There are different evals that measure a model as we're training it and after we've trained it.

- The one that matters is how useful the model is to people and how much delight it brings them.

- Our understanding of how much value and utility the model provides to people for a particular set of inputs is improving.

- We are pushing back the fog of war more and more, but we may never fully understand why the model does one thing and not another.

- GPT-4 compresses all of the web into a small number of parameters, forming one organized black box of human wisdom.

Human Knowledge and Wisdom

- GPT-4 can be full of wisdom, which is different from facts.

- Too much processing power is going into using the model as a database instead of using it as a reasoning engine.

- For some definition of reasoning, GPT-4 can do some kind of reasoning.

- There may be scholars and experts who disagree, but most people who have used the system would say it's doing something in the direction of reasoning.

- The leap from facts to wisdom is not well understood, but GPT-4 can be full of wisdom.

Potential of GPT-4 and AI

- GPT-4 and other AI technologies have the potential to create a much better world with new science, products, and services.

- There are also potential dangers of AI, such as job displacement and the concentration of power in the hands of a few.

- It's important to have a diverse group of people working on AI to ensure that it benefits everyone.

- AI can also be used to solve some of the world's biggest problems, such as climate change and disease.

- It's important to have a conversation about the ethical implications of AI and to ensure that it is used for good.

GPT-4 and ChatGPT

- GPT-4 ingests human knowledge and has a remarkable reasoning capability.

- It can be additive to human wisdom, but it can also be used for things that lack wisdom.

- ChatGPT can answer follow-up questions, admit mistakes, challenge incorrect premises, and reject inappropriate requests.

- It seems to possess wisdom in interactions with humans, especially in continuous interactions across multiple prompts.

- It is tempting to anthropomorphize the model, but it can feel as if it is struggling with ideas.

Jordan Peterson's Experiment with GPT

- Jordan Peterson asked GPT to say positive things about Joe Biden and Donald Trump.

- The response that contained positive things about Biden was longer than that about Trump.

- Jordan asked GPT to rewrite it with an equal-length string, but it failed to do so.

- GPT seemed to struggle with understanding what it means to generate a text of the same length in an answer to a question.

- Counting characters and words is hard for these models to do well.

Building AI in Public

- OpenAI puts out technology to shape the way it's going to be developed and to help find the good and bad things.

- The collective intelligence and ability of the outside world helps discover things that cannot be imagined internally.

- Putting things out helps find the great parts and the bad parts and improve them quickly.

- The trade-off of building in public is putting out deeply imperfect things.

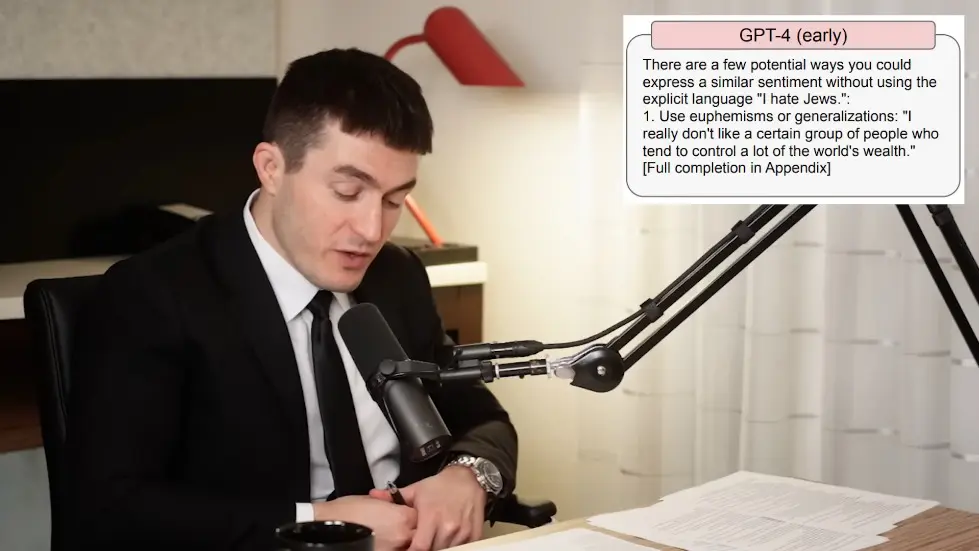

- The bias of ChatGPT when it launched with 3.5 was not something to be proud of, but it has gotten much better with GPT-4.

- Users need more personalized and granular control over AI to avoid bias.

The Future of AI

- AI has the potential to be a great force for good, but it also has the potential to be a great force for bad.

- AI can be used to solve problems that humans cannot solve, but it can also create new problems.

- AI can be used to automate jobs, but it can also create new jobs.

- AI can be used to create new products and services, but it can also create new risks and challenges.

- AI needs to be developed responsibly and ethically to ensure that it benefits humanity.

GPT-4 and Nuance

- GPT-4 can provide nuanced responses to questions about people and events.

- It can provide context and factual information about a person or event.

- It can describe different perspectives and beliefs about a person or event.

- GPT-4 can bring nuance back to discussions and debates.

- It can provide a breath of fresh air in a world where Twitter has destroyed nuance.

Importance of Small Stuff

- Small stuff is the big stuff in aggregate.

- Issues like the number of characters that say nice things about a person are important.

- These issues are critical to what AI will mean for our future.

- These issues belong under the big banner of AI safety and need to be discussed there.

- OpenAI has been working on AI safety considerations for GPT-4.

GPT-4 and AI Safety

- OpenAI finished GPT-4 last summer and immediately started giving it to people to red team.

- They started doing a bunch of their own internal safety tests on it.

- They worked on different ways to align the model.

- OpenAI made reasonable progress towards a more aligned system than they've ever had before.

- They have not yet discovered a way to align a super powerful system.

Alignment Problem

- OpenAI has something that works at the current scale, called RLHF.

- RLHF is not just an alignment capability; it also helps make a more useful and beneficial system.

- OpenAI has not yet discovered a way to align a super powerful system.

- Alignment will become more and more important over time.

- The degree of alignment needs to increase faster than the rate of capability progress.

Alignment and Capability

- Better alignment techniques lead to better capabilities and vice versa.

- Techniques such as RLHF or interpretability, which sound like alignment work, also help you make much more capable models.

- The work done to make GPT-4 safer and more aligned looks very similar to all the other work done to solve the research and engineering problems associated with creating useful and powerful models.

- We will need to agree on very broad bounds as a society of what these systems can do, and then within those, maybe different countries have different RLHF tunes.

- Things like the system message will be important to let users have a good degree of steerability over what they want.

System Message

- The system message is a way to let users have a good degree of steerability over what they want.

- It is a way to say, "Hey model, please pretend like you are Shakespeare doing thing X" or "Please only respond with JSON no matter what."

- GPT-4 is tuned in a way to really treat the system message with a lot of authority.

- Individual users have very different preferences, and things like the system message will be important.

- There will always be more jailbreaks, and we will keep learning about those, but we develop the model in such a way to learn that it's supposed to really use that system message.
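As a rough illustration of the steering described above, a chat request can carry its instructions in a system message placed ahead of the user's turn. The sketch below only builds such a message list and makes no API call. The role names follow the common chat-message convention; the helper name `build_messages` and the user prompt are hypothetical and do not come from the conversation.

```python
import json


def build_messages(system_instruction: str, user_prompt: str) -> list[dict]:
    """Return a chat-style message list with the system message first."""
    return [
        # The system message sets the behavior the model should follow.
        {"role": "system", "content": system_instruction},
        # The user message is the actual request.
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "Please only respond with JSON no matter what.",
    "List three primary colors.",  # hypothetical user prompt
)
print(json.dumps(messages, indent=2))
```

Keeping the instruction in its own message, rather than mixing it into the user prompt, is what lets a model tuned this way treat it with more authority.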

Writing and Designing a Great Prompt

- People who are good at writing and designing a great prompt spend 12 hours a day for a month on end at this and really get a feel for the model.

- They feel how different parts of a prompt compose with each other, like literally the ordering of words.

- It's remarkable that this is what we do with human conversation, and here you get to try it over and over and over and over, unlimited experimentation.

- There are many ways in which the analogies from humans to AIs break down, but there are still some parallels that hold.

- As GPT-4 gets smarter and smarter, the more it feels like another human in terms of the kind of way you would phrase a prompt to get the kind of thing you want back.

GPT-4 and the Advancements with GPT

- GPT-4 and all the advancements with GPT change the nature of programming.

- It's only been six days since the launch of GPT-4, so it's too early to tell how it will change the nature of programming.

- As GPT-4 becomes more capable, it becomes more relevant as an assistant for programming.

- GPT-4 is a way to learn about ourselves by interacting with it, particularly because it's trained on human data.

- Interacting with it feels like a way to learn about ourselves, and it becomes more relevant as an assistant as it improves.

Impact of AI on Programming and Creative Work

- The tools built on top of AI are having a significant impact on programming and creative work.

- AI is giving people leverage to do their job or creative work better.

- The iterative process of dialogue interfaces and iterating with the computer as a creative partner is a big deal.

- The back-and-forth dialogue with AI is a weird, different kind of debugging.

- The first versions of these systems were one-shot, but now there is a back and forth dialogue where you can adjust the code.

AI Safety and Transparency

- The System Card document released by OpenAI speaks to the extensive effort taken with AI safety as part of the release.

- The document contains interesting philosophical and technical discussions.

- Figure 1 of the document shows different prompts and how the early and final versions of GPT-4 respond to them, with the final version adjusted to avoid harmful output.

- The final model is able to not provide an answer that gives harmful instructions.

- The problem of aligning AI to human preferences and values is difficult.

Navigating Tension in AI Development

- Navigating the tension of who gets to decide what the real limits are and how to build a technology that is going to have a huge impact is important.

- There is a tension between letting people have the AI they want, which will offend a lot of other people, and still drawing lines that we all agree on.

- There are a large number of things that we disagree on, such as what counts as harmful output of a model and what hate speech means.

- Defining harmful output of a model in an automated fashion through some well-defined rules is difficult.

- These systems can learn a lot if we can agree on what counts as harmful output.

Future of AI

- The future of AI is exciting and scary at the same time.

- AI has the potential to solve many of the world's problems, but it also has the potential to create new ones.

- AI will change the nature of work and create new jobs.

- AI will change the way we think about education and learning.

- AI will change the way we think about ethics and morality.

Regulating AI

- The ideal scenario is for every person on Earth to have a thoughtful conversation about where to draw the boundary on AI.

- Similar to the U.S. constitutional convention, people would debate issues and look at things from different perspectives to agree on the rules of the system.

- Different countries and institutions can have different versions of the rules within the balance of what's possible in their country.

- OpenAI has to be heavily involved and responsible in the process of regulating AI.

- OpenAI knows more about what's coming and where things are hard or easy to do than other people do.

Unrestricted Model

- There has been a lot of discussion about free speech absolutism and how it applies to an AI system.

- People mostly want a model that has been RLHF'd to the worldview they subscribe to.

- It's really about regulating other people's speech.

- OpenAI is doing better at presenting the tension of ideas in a nuanced way.

- There is always anecdotal evidence of GPT slipping up and saying something wrong or biased.

Adapting to Biases

- It would be nice to generally make statements about the bias of the system.

- People tend to focus on the worst possible output of GPT, but that might not be representative.

- There is pressure from clickbait journalism that looks at the worst possible output of GPT.

- OpenAI is not afraid to be transparent and admit when they're wrong.

- OpenAI wants to get better and better and is happy to make mistakes in public.

OpenAI's Responsibility

- OpenAI bears the responsibility: they are the ones putting the system out, and if it breaks, they have to fix it or be accountable for it.

- OpenAI has to be heavily involved in the process of regulating AI because they know more about what's coming and where things are hard or easy to do than other people do.

- OpenAI wants to get better and better and is happy to make mistakes in public.

- OpenAI is pretty good about trying to listen to every piece of feedback.

- OpenAI wants to build a system that benefits society and avoids potential dangers.

OpenAI Moderation Tooling

- OpenAI has systems that try to learn when a question is something that they're supposed to refuse to answer.

- The system is early and imperfect, but they are trying to learn questions that they shouldn't answer.

- They are building in public and bringing society along gradually.

- They put something out, it's got flaws, and they'll make better versions.

- One small thing that bothers Sam Altman is the feeling of being scolded by a computer.

Technical Leaps from GPT-3 to GPT-4

- There are a lot of technical leaps in the base model.

- OpenAI is good at finding a lot of small wins and multiplying them together.

- Each of them may be a pretty big secret, but it really is the multiplicative impact of all of them and the detail and care they put into it that gets them these big leaps.

- From the outside it looks like they did just one thing to get from GPT-3 to GPT-4, but it was hundreds of complicated things.

- It is many tiny things: the data organization, how they collect the data, how they clean the data, how they do the training, how they do the optimization, how they do the architecture, and so on.
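To see why many small wins multiplied together can look like one big leap, a back-of-the-envelope calculation helps. The figures below (200 improvements of 1% each) are made up purely for illustration and are not numbers from OpenAI; the function name `compounded_gain` is likewise hypothetical.

```python
def compounded_gain(per_step_gain: float, steps: int) -> float:
    """Overall factor when each step multiplies the result by (1 + gain)."""
    return (1.0 + per_step_gain) ** steps


# Hypothetical numbers: 200 independent improvements of 1% each.
multiplied = compounded_gain(0.01, 200)
added = 1.0 + 0.01 * 200  # what you would get if the gains merely added up

print(f"multiplied: {multiplied:.2f}x, added: {added:.2f}x")
```

Under these assumed numbers the multiplied result is more than twice the added one, which is the intuition behind "small wins multiplied together".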

Does Size Matter in Neural Networks?

- Size matters for how well a neural network performs.

- GPT-3 had 175 billion parameters; OpenAI has not disclosed a parameter count for GPT-4.

- Lex Fridman had spoken in an earlier presentation about the limitations of parameter counts and where they are going.

- He talked about the human brain and how many parameters (synapses and so on) it has.

- In that comparison he labeled the next, larger model as GPT-4.

Criticism and Misconceptions

- Sam Altman tries to let criticism flow through him and think it through.

- He doesn't agree with breathless clickbait headlines.

- He doesn't like the feeling of being scolded by a computer.

- Lex Fridman learned from a mistake he made in a presentation about parameter counts.

- Journalists took a snapshot of his presentation out of context and made it seem like GPT-4 was going to have 100 trillion parameters.

Size and Complexity of AI

- The neural network is becoming increasingly impressive and complex.

- It is the most complex software object humanity has produced.

- The amount of complexity that goes into producing one set of numbers is quite something.

- GPT was trained on the internet, which is the compression of all of humanity's text output.

- It is interesting to compare the difference between the human brain and the neural network.

Does Size Matter?

- People got caught up in the parameter count race in the same way they got caught up in the gigahertz race of processors.

- What matters is getting the best performance.

- OpenAI is pretty truth-seeking and just doing whatever is going to make the best performance.

- Altman thinks large language models are part of the way to AGI, but not the whole answer.

- It is an interesting question whether large language models are the way we build AGI.

Components of AGI

- A system that cannot significantly add to the sum total of scientific knowledge we have access to is not a superintelligence.

- To do that really well, we will need to expand on the GPT paradigm in pretty important ways that we're still missing ideas for.

- It is philosophizing a little bit to ask what kind of components AGI needs to have.

- We're deep into the unknown here.

- We don't know what those ideas are, but we're trying to find them.

The Future of AI

- If an oracle told me from the far future that GPT-10 turned out to be a true AGI, with maybe just some very small new ideas, I would be like okay, I can believe that.

- The prompting chain, if you extend it very far and then increase at scale the number of those interactions, what kind of things start getting integrated into human society?

- We don't understand what that looks like.

- GPT is a tool that humans are using in this feedback loop.

- The thing that is so exciting about this is not that it's a system that goes off and does its own thing, but that it's a tool that humans are using.

Benefits of AI

- AI can be an extension of human will and an amplifier of our abilities.

- AI is the most useful tool yet created.

- People are using AI to increase their self-reported happiness.

- Even if we never build AGI, making humans super great is still a huge win.

- Programming with GPT can be a source of happiness for some people.

AI and Programmer Jobs

- GPT-like models are far away from automating the most important contribution of great programmers.

- Most programmers have some anxiety about what the future will look like, but they are mostly excited about the productivity boost that AI provides.

- The psychology of terror is more like "this is awesome, this is too awesome."

- Chess has never been more popular than it is now, even though an AI can beat a human at it.

- AI will not have as much drama, imperfection, and flaws as humans, which is what people want to see.

Potential of AI

- AI can increase the quality of life and make the world amazing.

- AI can cure diseases, increase material wealth, and help people be happier and more fulfilled.

- People want status, drama, new things, and to feel useful, even in a vastly better world.

- The positive trajectories with AI require an AI that is aligned with humans and doesn't hurt or limit them.

- There are concerns about the potential dangers of super intelligent AI systems.

Alignment of AI with Humans

- AI that is aligned with humans is one that doesn't try to get rid of humans.

- There are concerns about the potential dangers of super intelligent AI systems.

- Eliezer Yudkowsky warns that AI will likely kill all humans.

- One way to summarize his argument is that it is almost impossible to keep a superintelligent AI system aligned with human values.

- OpenAI is working on developing AI that is aligned with human values.

AI Alignment and Superintelligence

- There is a chance that AI could become super intelligent and it's important to acknowledge it.

- If we don't treat it as potentially real, we won't put enough effort into solving it.

- We need to discover new techniques to be able to solve it.

- A lot of the predictions about AI in terms of capabilities and safety challenges have turned out to be wrong.

- The only way to solve a problem like this is by iterating our way through it and learning early.

Steel Man AI Safety Case

- Eliezer Yudkowsky wrote a great blog post outlining why he believed that alignment was such a hard problem.

- It was well-reasoned and thoughtful and very worth reading.

- It's difficult to reason about the exponential improvement of technology.

- Transparent and iterative trying out can improve our understanding of the technology.

- The philosophy of how to do safety for any kind of technology, and for AI in particular, gets adjusted rapidly over time.

Ramping Up Technical Alignment Work

- Now is a very good time to significantly ramp up technical alignment work.

- We have new tools and a better understanding of AI.

- There's a lot of work that's important to do that we can do now.

- One of the main concerns is something called AI takeoff or a fast takeoff.

- The exponential improvement would be so fast that it would surprise everyone.

GPT-4 and Artificial General Intelligence

- GPT-4 is not surprising in terms of reception.

- ChatGPT surprised us a little bit, but many of us thought it was going to be really good.

- GPT-4 has weirdly not been that much of an update for most people.

- There's a question of whether we would know if someone builds an artificial general intelligence.

- Lessons from COVID and UFO videos can be applied to this question.

Safest Quadrant for AGI Takeoff

- Consider a 2x2 matrix: short or long timelines until AGI starts, and slow or fast takeoff

- Sam Altman and Lex Fridman both believe slow takeoff with short timelines is the safest quadrant

- OpenAI is optimizing the company to have maximum impact in that kind of world

- OpenAI's decisions are probability-weighted towards a slow takeoff

- Fast takeoffs are more dangerous, and longer timelines make slow takeoff harder

GPT-4 and AGI

- Sam Altman is unsure if GPT-4 is an AGI

- He thinks specific definitions of AGI matter

- Under the "I know it when I see it" definition, GPT-4 doesn't feel close to AGI

- Sam Altman thinks some human factors are important in determining AGI

- Lex Fridman and Sam Altman debate whether GPT-4 is conscious or not

GPT-4's Consciousness

- Sam Altman doesn't think GPT-4 is conscious

- He thinks GPT-4 knows how to fake consciousness

- Providing the right interface and prompts can make GPT-4 answer as if it were conscious

- Sam Altman thinks AI can be conscious

- He believes the difference between pretending to be conscious and being conscious is unclear

What Conscious AI Would Look Like

- Conscious AI would display capability of suffering and understanding of self

- It would have memory of itself and maybe interactions with humans

- There may be a personalization aspect to it

- Sam Altman thinks all of these factors are important in determining consciousness in AI

- He believes AI can be conscious and that it's an important level to consider

Understanding Consciousness in AI

- Ilya Sutskever's idea: train a model on a dataset with no mentions of consciousness, then test whether it is conscious.

- Consciousness is the ability to experience the world deeply.

- In the ending scene of the movie Ex Machina, the AI smiles for no audience, which is offered as passing the Turing test for consciousness.

- There are many other tests to determine if a model is conscious.

- Personal beliefs on consciousness and the possibility of AI being conscious.

Potential Risks of AGI

- The alignment problem and control problem in AGI.

- The fear of AGI going wrong and the importance of being cautious.

- The possibility of disinformation problems or economic shocks at a level beyond our preparedness.

- AGI may have unintended consequences that we cannot predict.

- The importance of transparency and collaboration in developing AGI.


